Since Hive 2.0, ORC files are read and written through a new API (https://orc.apache.org).

The code in this post generates an ORC file locally with a Java program and then loads it into a Hive table.

The code is as follows:

package com.lxw1234.hive.orc;

import java.nio.charset.StandardCharsets;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.hive.ql.exec.vector.BytesColumnVector;
import org.apache.hadoop.hive.ql.exec.vector.VectorizedRowBatch;
import org.apache.orc.CompressionKind;
import org.apache.orc.OrcFile;
import org.apache.orc.TypeDescription;
import org.apache.orc.Writer;

public class TestORCWriter {

    public static void main(String[] args) throws Exception {
        // Define the ORC schema, i.e. the table structure:
        // CREATE TABLE lxw_orc1 (
        //   field1 STRING,
        //   field2 STRING,
        //   field3 STRING
        // ) stored AS orc;
        TypeDescription schema = TypeDescription.createStruct()
                .addField("field1", TypeDescription.createString())
                .addField("field2", TypeDescription.createString())
                .addField("field3", TypeDescription.createString());

        // Absolute local path of the output ORC file.
        // With a default Configuration (no cluster config files on the classpath),
        // this path resolves to the local file system.
        String lxw_orc1_file = "/tmp/lxw_orc1_file.orc";
        Configuration conf = new Configuration();

        Writer writer = OrcFile.createWriter(new Path(lxw_orc1_file),
                OrcFile.writerOptions(conf)
                        .setSchema(schema)
                        .stripeSize(67108864)    // 64 MB
                        .bufferSize(131072)      // 128 KB
                        .blockSize(134217728)    // 128 MB
                        .compress(CompressionKind.ZLIB)
                        .version(OrcFile.Version.V_0_12));

        // Content to write: one comma-separated row per element
        String[] contents = new String[]{"1,a,aa", "2,b,bb", "3,c,cc", "4,d,dd"};

        VectorizedRowBatch batch = schema.createRowBatch();
        for (String content : contents) {
            int rowCount = batch.size++;
            // limit -1 keeps trailing empty fields
            String[] logs = content.split(",", -1);
            for (int i = 0; i < logs.length; i++) {
                ((BytesColumnVector) batch.cols[i])
                        .setVal(rowCount, logs[i].getBytes(StandardCharsets.UTF_8));
            }
            // Batch full: flush only after every column of the row has been set
            if (batch.size == batch.getMaxSize()) {
                writer.addRowBatch(batch);
                batch.reset();
            }
        }
        // Flush the remaining rows, if any
        if (batch.size != 0) {
            writer.addRowBatch(batch);
        }
        writer.close();
    }
}
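The batching pattern used above (fill one row at a time, flush when the batch is full, then flush the remainder) can be tried out without any Hadoop or ORC jars. The following is only a minimal sketch of that pattern: the `Batch` class and the `flush` method are hypothetical stand-ins for `VectorizedRowBatch` and `Writer.addRowBatch`, and it assumes every input line has at least `numCols` fields.

```java
import java.util.ArrayList;
import java.util.List;

public class RowBatchingSketch {

    /** Hypothetical stand-in for VectorizedRowBatch: column-major storage with a fixed capacity. */
    static final class Batch {
        final String[][] cols; // indexed as cols[column][row]
        final int maxSize;
        int size = 0;

        Batch(int numCols, int maxSize) {
            this.cols = new String[numCols][maxSize];
            this.maxSize = maxSize;
        }
    }

    /** Hypothetical stand-in for Writer.addRowBatch: copies the filled rows out, then empties the batch. */
    static void flush(Batch batch, List<List<String>> sink) {
        List<String> rows = new ArrayList<>();
        for (int r = 0; r < batch.size; r++) {
            StringBuilder row = new StringBuilder();
            for (int c = 0; c < batch.cols.length; c++) {
                if (c > 0) {
                    row.append('|');
                }
                row.append(batch.cols[c][r]);
            }
            rows.add(row.toString());
        }
        sink.add(rows);
        batch.size = 0;
    }

    /** Fills the batch row by row and returns the list of flushed batches. */
    static List<List<String>> writeAll(String[] lines, int numCols, int maxSize) {
        List<List<String>> flushed = new ArrayList<>();
        Batch batch = new Batch(numCols, maxSize);
        for (String line : lines) {
            int row = batch.size++;
            // limit -1 keeps trailing empty fields, so "4,d," still yields three values
            String[] fields = line.split(",", -1);
            for (int c = 0; c < numCols; c++) {
                batch.cols[c][row] = fields[c];
            }
            // Check fullness once per row, after every column has been set
            if (batch.size == batch.maxSize) {
                flush(batch, flushed);
            }
        }
        // Flush the final, partially filled batch
        if (batch.size > 0) {
            flush(batch, flushed);
        }
        return flushed;
    }

    public static void main(String[] args) {
        String[] contents = {"1,a,aa", "2,b,bb", "3,c,cc", "4,d,"};
        // A capacity of 3 forces one full flush and one partial flush
        System.out.println(writeAll(contents, 3, 3));
    }
}
```

With a capacity of 3 and four input rows, the sketch flushes two batches: one with three rows and one with the last row. If the fullness check sat inside the column loop instead, a batch could be flushed and reset before the remaining columns of its last row were set.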

Package the program as an ordinary jar: orcwriter.jar

In addition, running the Java program locally requires the following dependency jars:

commons-collections-3.2.1.jar hadoop-auth-2.3.0-cdh5.0.0.jar hive-exec-2.1.0.jar slf4j-log4j12-1.7.7.jar commons-configuration-1.6.jar hadoop-common-2.3.0-cdh5.0.0.jar log4j-1.2.16.jar commons-logging-1.1.1.jar hadoop-hdfs-2.3.0-cdh5.0.0.jar slf4j-api-1.7.7.jar

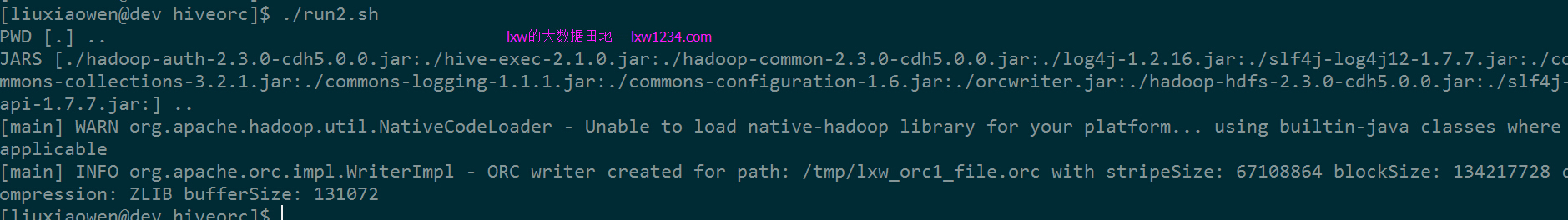

run2.sh wraps the command that runs the program:

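#!/bin/bash

# Directory containing this script (a separate name avoids overwriting the shell's own PWD variable)
BASE_DIR=$(dirname "$0")
echo "BASE_DIR [${BASE_DIR}] .."

# Build the classpath from every jar under that directory (-printf requires GNU find)
JARS=$(find -L "${BASE_DIR}" -name '*.jar' -printf '%p:')
echo "JARS [${JARS}] .."

# "$@" passes the script arguments through without re-splitting them
"${JAVA_HOME}/bin/java" -cp "${JARS}" com.lxw1234.hive.orc.TestORCWriter "$@"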

Run ./run2.sh:

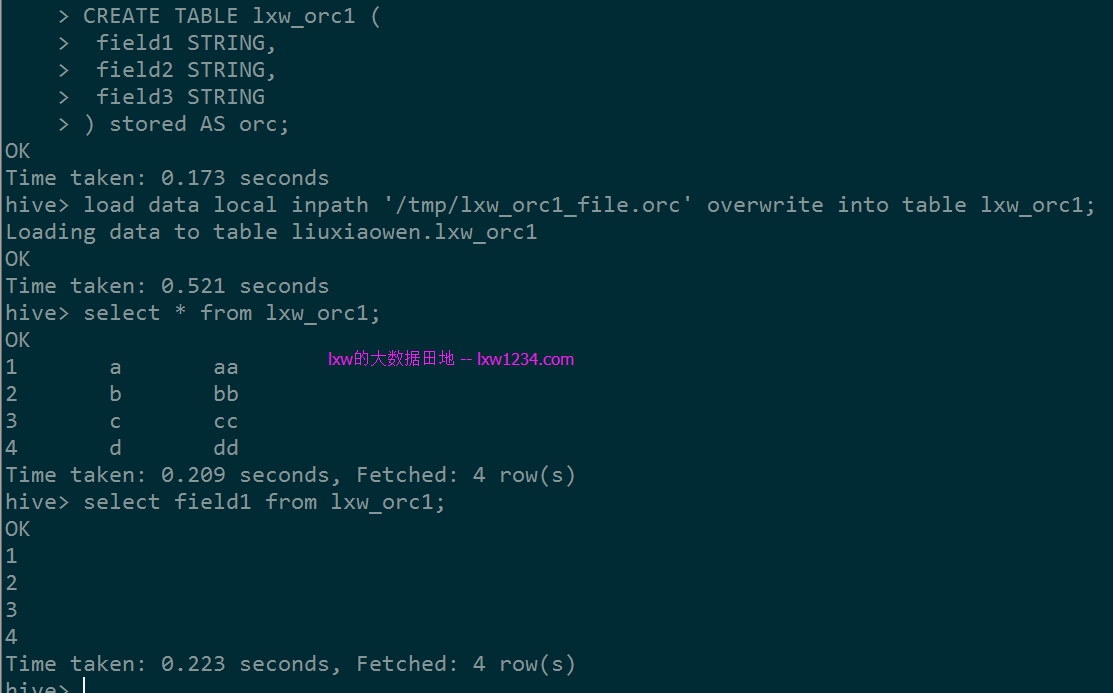

Create the table in Hive and LOAD the data:
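The exact statements are not shown here, so the following is only a sketch of what this step might look like. The CREATE TABLE statement comes from the comment in TestORCWriter; the LOAD and SELECT statements, the class name `LoadOrcIntoHiveSketch`, and the use of JDBC rather than the Hive command line are assumptions. Running the statements requires the Hive JDBC driver on the classpath and a reachable HiveServer2, and with HiveServer2 the LOCAL path refers to the server host's file system. Without arguments, the program only prints the statements.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Arrays;
import java.util.List;

public class LoadOrcIntoHiveSketch {

    // DDL taken from the comment in TestORCWriter
    static final String CREATE_TABLE =
            "CREATE TABLE lxw_orc1 (field1 STRING, field2 STRING, field3 STRING) STORED AS ORC";

    // Assumed statement: loads the file written by TestORCWriter
    static final String LOAD_DATA =
            "LOAD DATA LOCAL INPATH '/tmp/lxw_orc1_file.orc' INTO TABLE lxw_orc1";

    // Assumed verification query
    static final String SELECT_ALL = "SELECT * FROM lxw_orc1";

    /** Returns the statements in the order they are executed. */
    static List<String> statements() {
        return Arrays.asList(CREATE_TABLE, LOAD_DATA, SELECT_ALL);
    }

    /** Executes the statements over JDBC and prints the three columns of every row. */
    static void run(String jdbcUrl) throws SQLException {
        try (Connection conn = DriverManager.getConnection(jdbcUrl);
             Statement stmt = conn.createStatement()) {
            stmt.execute(CREATE_TABLE);
            stmt.execute(LOAD_DATA);
            try (ResultSet rs = stmt.executeQuery(SELECT_ALL)) {
                while (rs.next()) {
                    System.out.println(rs.getString(1) + "\t" + rs.getString(2) + "\t" + rs.getString(3));
                }
            }
        }
    }

    public static void main(String[] args) throws SQLException {
        if (args.length == 0) {
            // No JDBC URL given: only print the statements
            for (String sql : statements()) {
                System.out.println(sql + ";");
            }
        } else {
            // Example URL (an assumption): jdbc:hive2://localhost:10000/default
            run(args[0]);
        }
    }
}
```

The printed statements can also be pasted into a Hive session directly, which is closer to how a one-off load like this is usually done.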

As the result shows, the generated ORC file can be used normally.

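In most cases it is still advisable to convert text files to ORC format inside Hive; generating ORC files locally with Java, as done here, is for special-case requirements.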


When reposting, please credit: lxw的大数据田地 » Writing local ORC files with Java (Hive2 API)